publications

2024

-

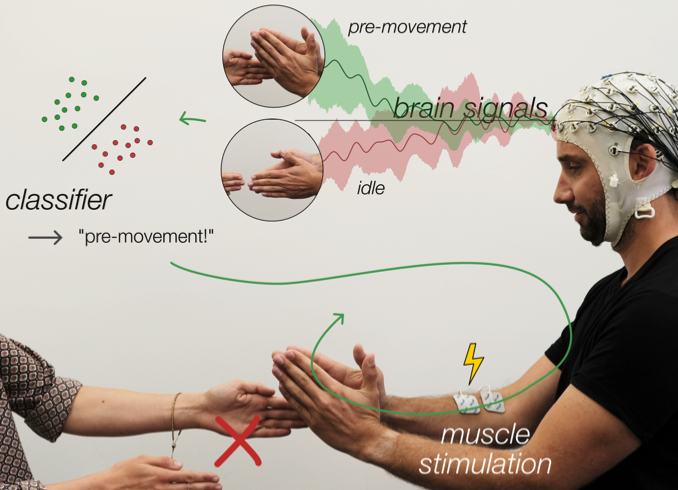

Sense of Agency in Closed-loop Muscle Stimulation. Lukas Gehrke, Leonie Terfurth, and Klaus Gramann. 2024

-

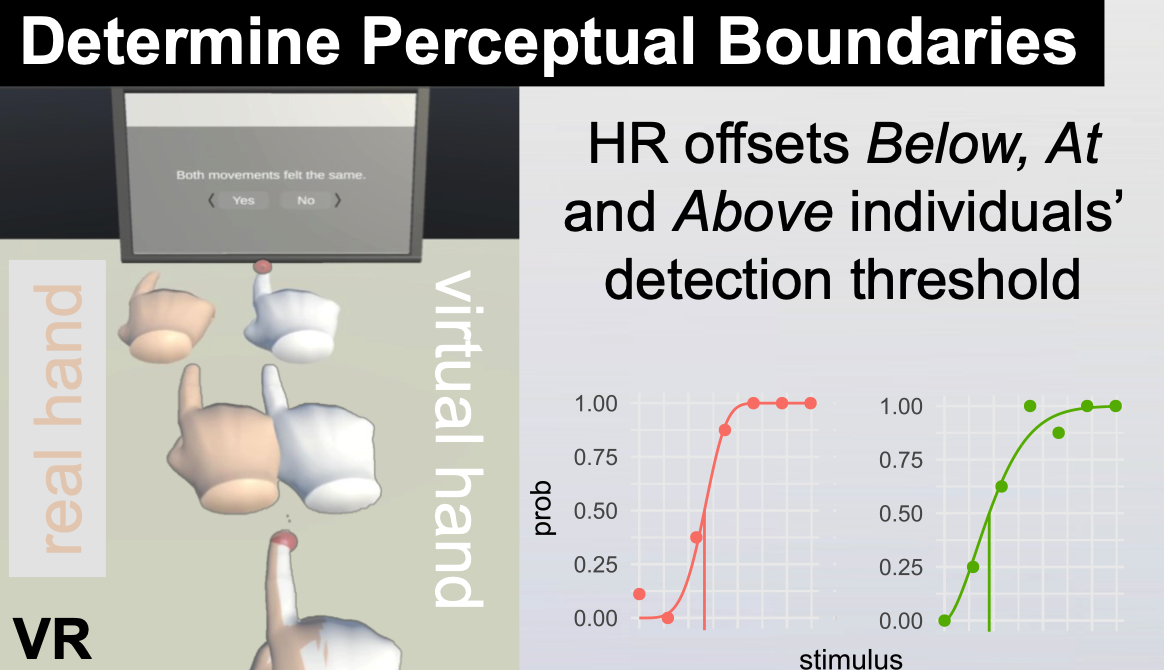

Predicting the limits: Tailoring unnoticeable hand redirection offsets in virtual reality to individuals’ perceptual boundaries. Martin Feick, Kora Persephone Regitz, Lukas Gehrke, and 5 more authors. In Proceedings of the 37th Annual ACM Symposium on User Interface Software and Technology, Oct 2024

Many illusion and interaction techniques in Virtual Reality (VR) rely on Hand Redirection (HR), which has proved to be effective as long as the introduced offsets between the position of the real and virtual hand do not noticeably disturb the user experience. Yet calibrating HR offsets is a tedious and time-consuming process involving psychophysical experimentation, and the resulting thresholds are known to be affected by many variables, limiting HR’s practical utility. As a result, there is a clear need for alternative methods that allow tailoring HR to the perceptual boundaries of individual users. We conducted an experiment with 18 participants combining movement, eye gaze and EEG data to detect HR offsets Below, At, and Above individuals’ detection thresholds. Our results suggest that we can distinguish HR At and Above from no HR. Our exploration provides a promising new direction with potentially strong implications for the broad field of VR illusions.

-

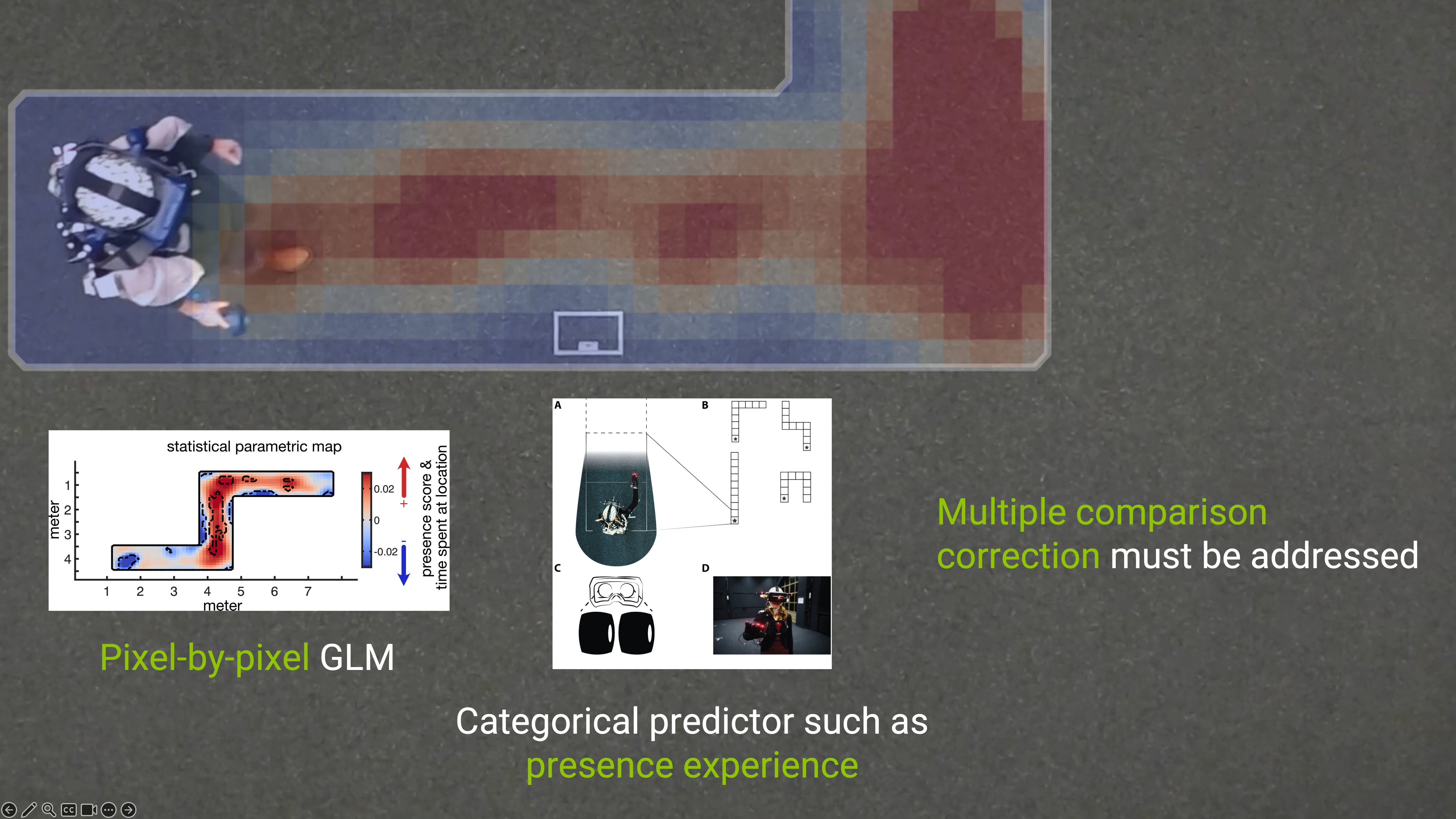

Exposing movement correlates of presence experience in virtual reality using parametric maps. Lukas Gehrke and Klaus Gramann. In 2024 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Mar 2024

Genuine experiences where users feel a deep level of connection are the key quality of room-scale virtual reality (VR). The freedom to move promises natural sensory experiences stimulating a feeling of presence. However, users differ in their eagerness to move: some prefer movement by teleportation while others would keep walking forever. Such individual differences challenge the inclusive design necessary for bestseller applications. In this methodological research contribution, we propose to study user behavior and experience using parametric maps based on general linear models (GLM) to overcome limitations of traditional data aggregation techniques. In the investigated study, participants explored invisible mazes, touching hidden walls for brief moments of visual guidance. We demonstrate that experienced presence correlated with where participants spent time exploring the VR. We found an increase in presence coinciding with participants being less likely to collide with invisible walls and spending more time in segments critical for navigational success.

-

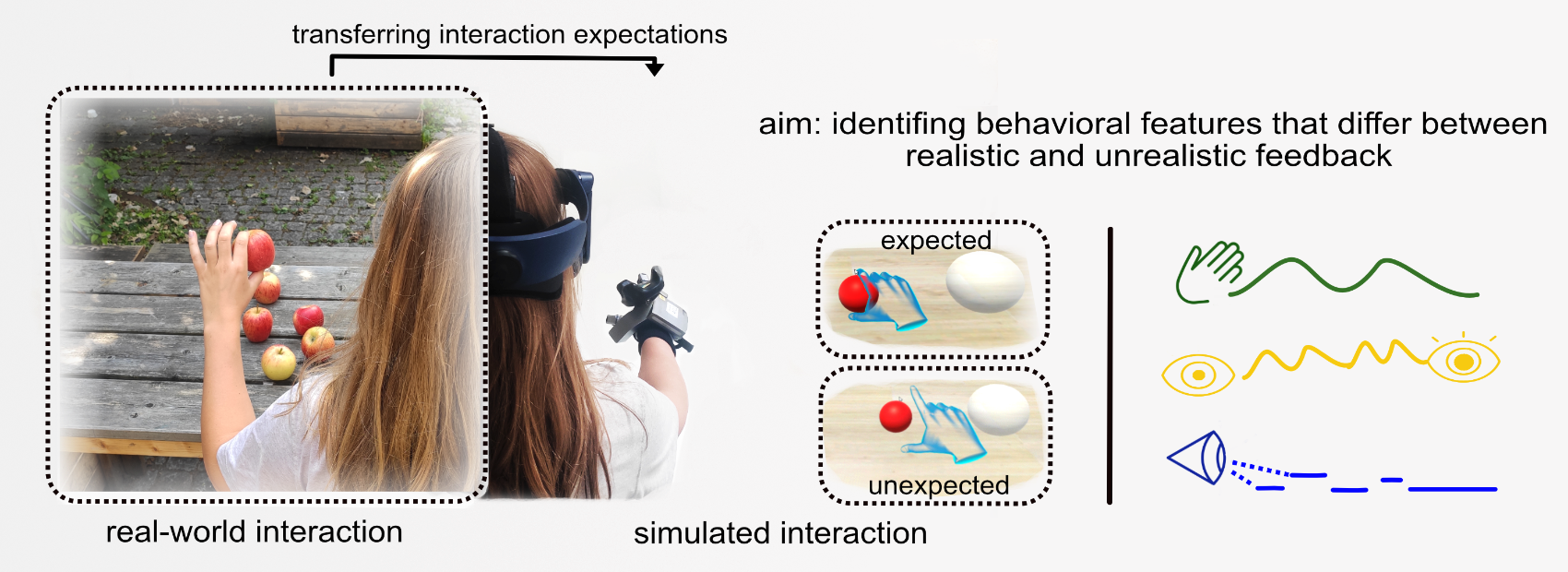

Decoding Realism of Virtual Objects: Exploring Behavioral and Ocular Reactions to Inaccurate Interaction Feedback. Leonie Terfurth, Klaus Gramann, and Lukas Gehrke. ACM Trans. Comput.-Hum. Interact., Apr 2024

Achieving temporal synchrony between sensory modalities is crucial for natural perception of object interaction in virtual reality. While subjective questionnaires are currently used to evaluate users’ VR experiences, leveraging behavior and psychophysiological responses can provide additional insights. We investigated motion and ocular behavior as discriminators between realistic and unrealistic object interactions. Participants grasped and placed a virtual object while experiencing sensory feedback that either matched their expectations or occurred too early. We also explored visual-only feedback vs. combined visual and haptic feedback. Due to technological limitations, a condition with delayed feedback was added post-hoc. Gaze-based metrics revealed discrimination between high and low feedback realism. Increased interaction uncertainty was associated with longer fixations on the avatar hand and temporal shifts in the gaze-action relationship. Our findings enable real-time evaluation of users’ perception of realism in interactions. They facilitate the optimization of interaction realism in virtual environments and beyond.

2023

-

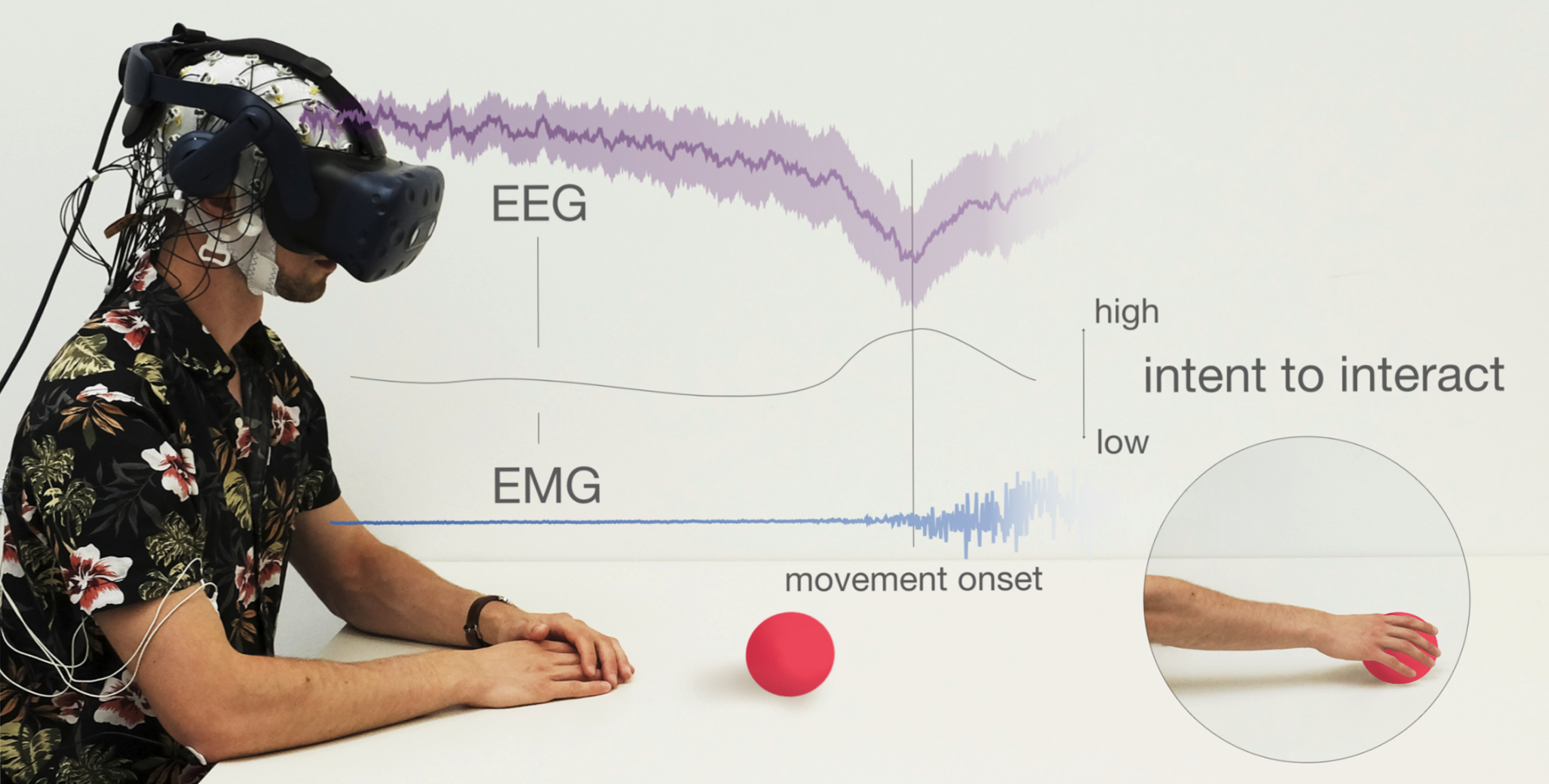

Modeling the Intent to Interact with VR using Physiological Features. Willy Nguyen, Klaus Gramann, and Lukas Gehrke. IEEE Transactions on Visualization and Computer Graphics, Apr 2023

Objective. Mixed-Reality (XR) technologies promise a user experience (UX) that rivals the interactive experience with the real world. The key facilitators in the design of such a natural UX are that the interaction has zero lag and that users experience no excess mental load. This is difficult to achieve due to technical constraints such as motion-to-photon latency as well as false positives during gesture-based interaction. Methods. In this paper, we explored the use of physiological features to model the user’s intent to interact with a virtual reality (VR) environment. Accurate predictions about when users want to express an interaction intent could overcome the limitations of an interactive device that lags behind the intention of a user. We computed time-domain features from electroencephalography (EEG) and electromyography (EMG) recordings during a grab-and-drop task in VR and cross-validated a Linear Discriminant Analysis (LDA) for three different combinations of (1) EEG, (2) EMG and (3) EEG-EMG features. Results & Conclusion. We found the classifiers to detect the presence of a pre-movement state from background idle activity reflecting the users’ intent to interact with the virtual objects (EEG: 62% ± 10%, EMG: 72% ± 9%, EEG-EMG: 69% ± 10%) above simulated chance level. The features leveraged in our classification scheme have a low computational cost and are especially useful for fast decoding of users’ mental states. Our work is a further step towards a useful classification of users’ intent to interact, as a high temporal resolution and speed of detection are crucial. This facilitates natural experiences through zero-lag adaptive interfaces.
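The abstract above compares classifier accuracies against a simulated chance level but does not describe how that level was obtained. The following is a minimal sketch of one common way to estimate such a threshold, by simulating a classifier that guesses at random. The function name, the trial count of 100, the number of simulations, and the significance level are all assumptions for illustration and are not taken from the paper.

```python
import random


def simulated_chance_level(n_trials, n_classes=2, n_sims=2000, alpha=0.05, seed=0):
    """Accuracy that a purely guessing classifier exceeds in only ~alpha of runs.

    All parameters are illustrative assumptions, not values from the paper.
    """
    rng = random.Random(seed)
    accuracies = []
    for _ in range(n_sims):
        # Each guess is correct with probability 1 / n_classes.
        hits = sum(rng.random() < 1.0 / n_classes for _ in range(n_trials))
        accuracies.append(hits / n_trials)
    accuracies.sort()
    # Empirical (1 - alpha) quantile of the guessing distribution.
    index = min(n_sims - 1, int((1.0 - alpha) * n_sims))
    return accuracies[index]


# Hypothetical example: 100 test trials, two classes (pre-movement vs. idle).
threshold = simulated_chance_level(n_trials=100)
print(f"accuracy needed to exceed simulated chance: {threshold:.2f}")
```

With fewer trials the threshold moves further above 50%, which is why a fixed 50% baseline can be misleading for small test sets.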

-

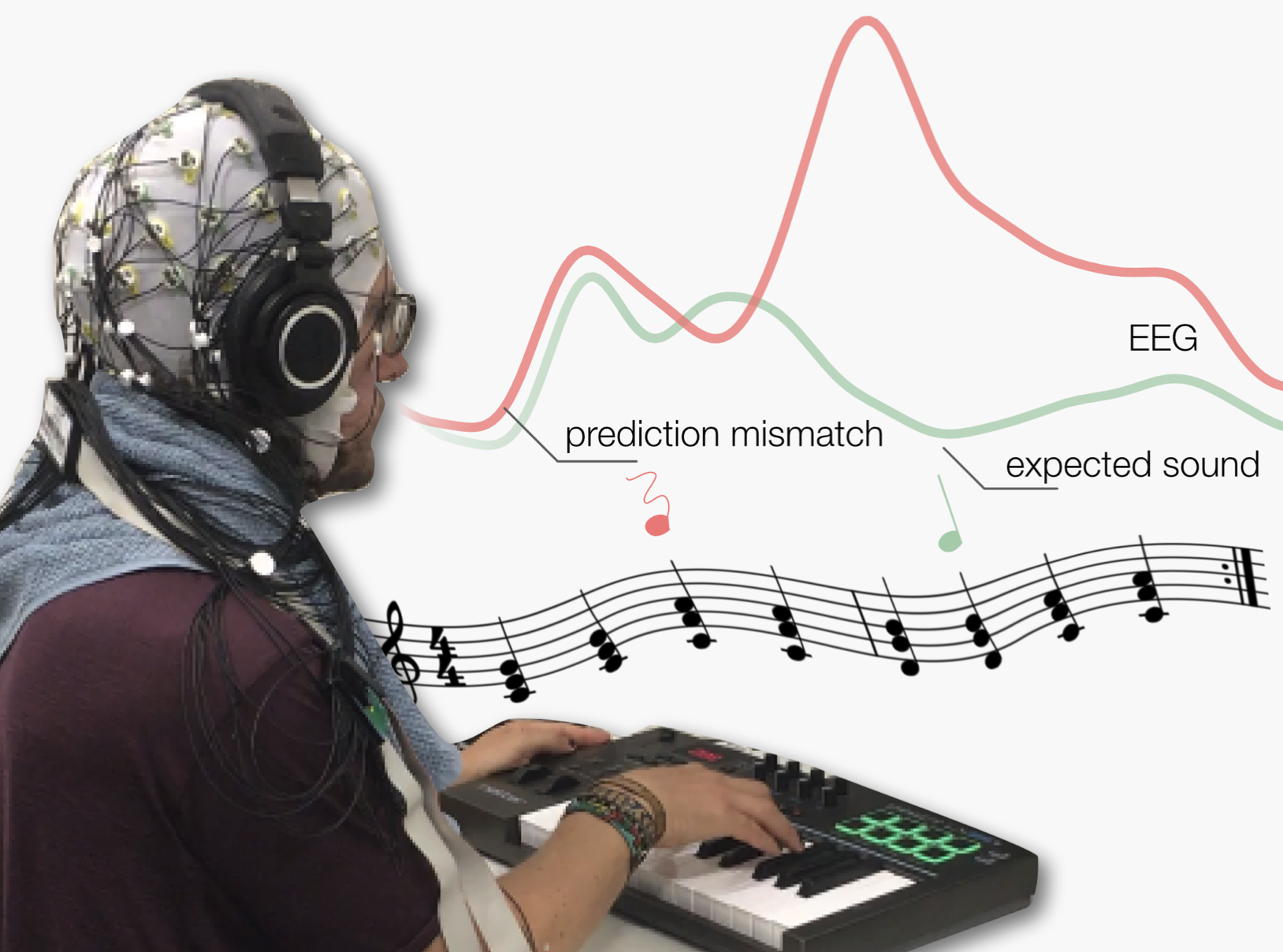

Towards an Implicit Metric of Sensory-Motor Accuracy: Brain Responses to Auditory Prediction Errors in Pianists. Elisabeth Pangratz, Francesco Chiossi, Steeven Villa, and 2 more authors. In Proceedings of the 15th Conference on Creativity and Cognition, Apr 2023

While listening to music, the brain expects specific acoustic events based on learned musical rules. During music performance, expectancy is additionally created based on motor action by linking keypresses to their sounds. We investigated electroencephalography (EEG) responses to auditory expectancy violations in piano performance and perception. In our study, pianists experienced manipulations of different acoustic features, such as pitch and loudness, while playing and listening to piano sequences. We found that manipulations during performance elicited deflections with stronger amplitudes compared to manipulations during perception, indicating that the action of producing sounds strengthens auditory expectancy. Loudness manipulations, violating musical regularity, elicited deflections with shorter latencies compared to pitch manipulations, which violate harmonic expectancy, suggesting that the brain processes expectancy violations of distinct acoustic features in different ways. These EEG signatures may prove useful for applications in intelligent music interfaces by providing information about sensory-motor accuracy.

2022

-

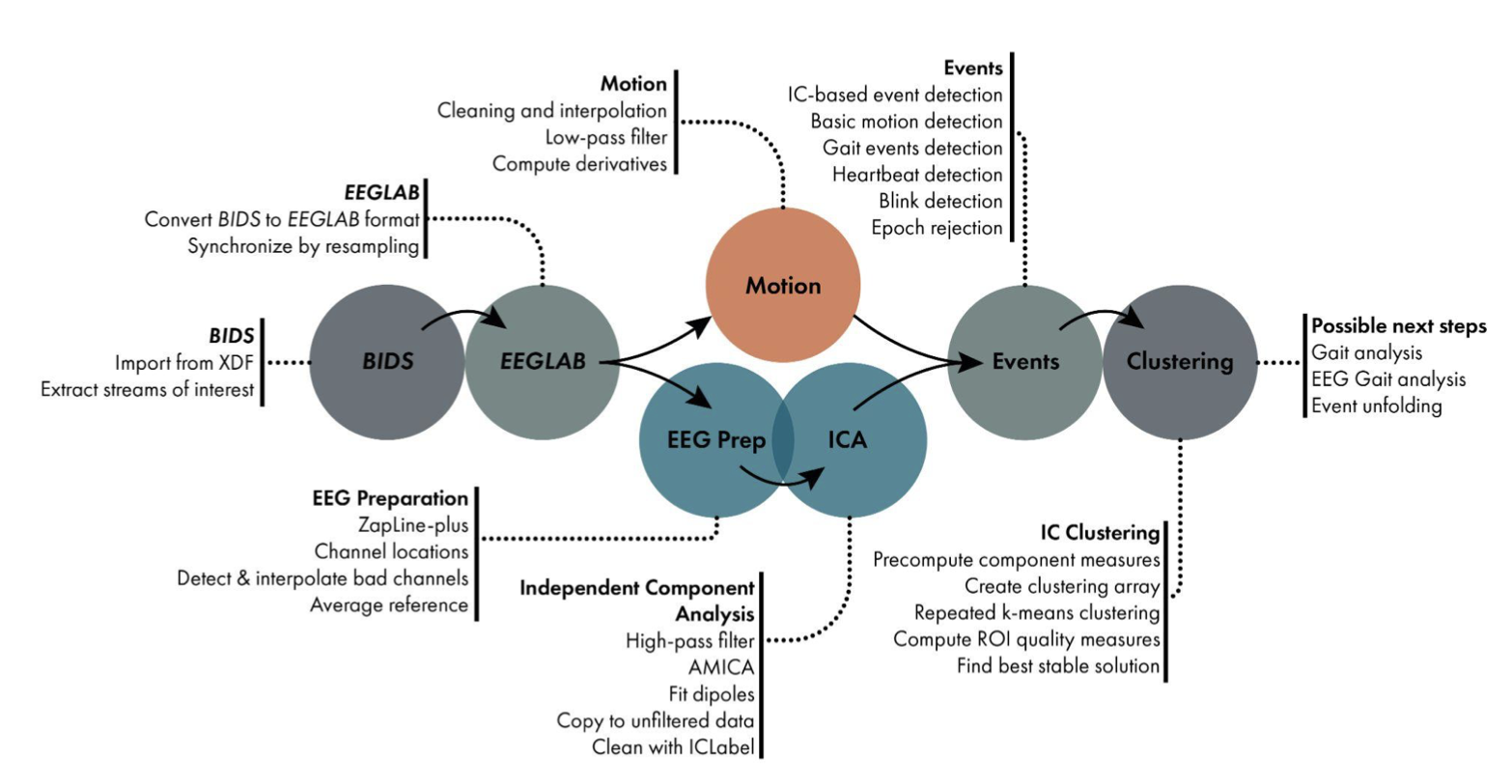

The BeMoBIL Pipeline for automated analyses of multimodal mobile brain and body imaging data. Marius Klug, Sein Jeung, Anna Wunderlich, and 6 more authors. bioRxiv, Apr 2022

Advancements in hardware technology and analysis methods allow more and more mobility in electroencephalography (EEG) experiments. Mobile Brain/Body Imaging (MoBI) studies may record various types of data such as motion or eye tracking in addition to neural activity. Although there are options available to analyze EEG data in a standardized way, they do not fully cover complex multimodal data from mobile experiments. We thus propose the BeMoBIL Pipeline, an easy-to-use pipeline in MATLAB that supports the time-synchronized handling of multimodal data. It is based on EEGLAB and FieldTrip and consists of automated functions for EEG preprocessing and subsequent source separation. It also provides functions for motion data processing and extraction of event markers from different data modalities, including the extraction of eye-movement and gait-related events from EEG using independent component analysis. The pipeline introduces a new robust method for region-of-interest-based group-level clustering of independent EEG components. Finally, the BeMoBIL Pipeline provides analytical visualizations at various processing steps, keeping the analysis transparent and allowing for quality checks of the resulting outcomes. All parameters and steps are documented within the data structure and can be fully replicated using the same scripts. This pipeline makes the processing and analysis of (mobile) EEG and body data more reliable and independent of the prior experience of the individual researchers, thus facilitating the use of EEG in general and MoBI in particular. It is an open-source project available for download at https://github.com/BeMoBIL/bemobil-pipeline, which allows for community-driven adaptations in the future.

-

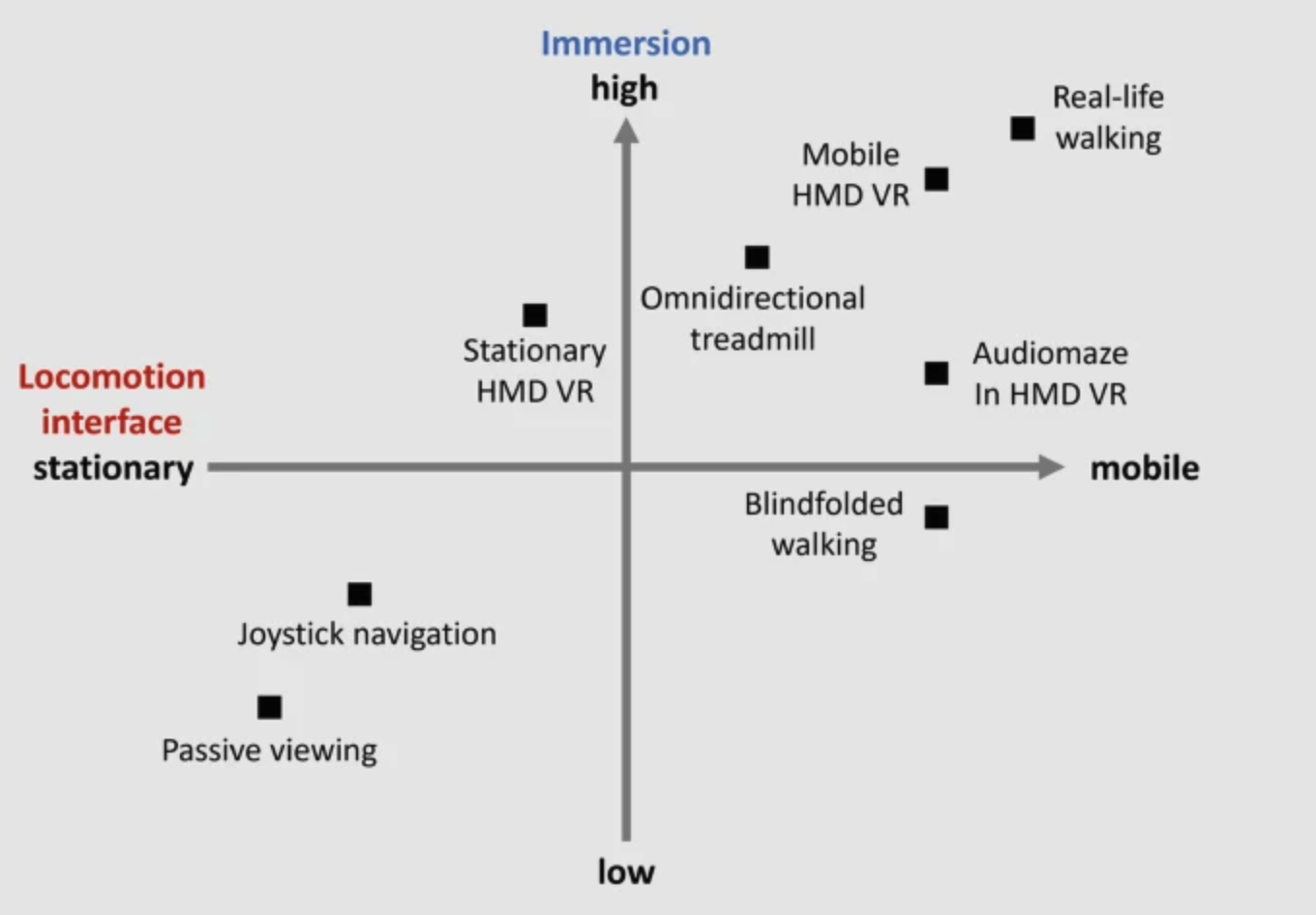

Virtual reality for spatial navigation. Sein Jeung, Christopher Hilton, Timotheus Berg, and 2 more authors. In , Apr 2022

Immersive virtual reality (VR) allows its users to experience physical space in a non-physical world. It has developed into a powerful research tool to investigate the neural basis of human spatial navigation as an embodied experience. The task of wayfinding can be carried out by using a wide range of strategies, leading to the recruitment of various sensory modalities and brain areas in real-life scenarios. While traditional desktop-based VR setups primarily focus on vision-based navigation, immersive VR setups, especially mobile variants, can efficiently account for motor processes that constitute locomotion in the physical world, such as head-turning and walking. When used in combination with mobile neuroimaging methods, immersive VR affords a natural mode of locomotion and high immersion in experimental settings, designing an embodied spatial experience. This in turn facilitates ecologically valid investigation of the neural underpinnings of spatial navigation.

-

Neural sources of prediction errors detect unrealistic VR interactions. Lukas Gehrke, Pedro Lopes, Marius Klug, and 2 more authors. Journal of Neural Engineering, Apr 2022

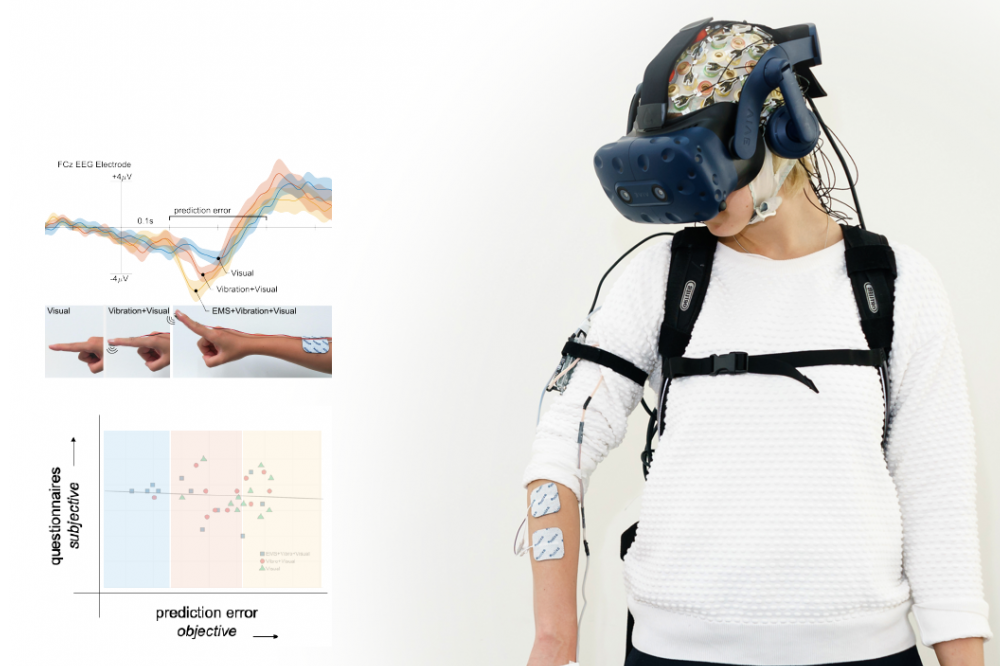

Objective. Neural interfaces hold significant promise to implicitly track user experience. Their application in virtual and augmented reality (VR/AR) simulations is especially favorable as it allows user assessment without breaking the immersive experience. In VR, designing immersion is one key challenge. Subjective questionnaires are the established metrics to assess the effectiveness of immersive VR simulations. However, administering such questionnaires requires breaking the immersive experience they are supposed to assess. Approach. We present a complementary metric based on event-related potentials. For the metric to be robust, the neural signal employed must be reliable. Hence, it is beneficial to target the neural signal’s cortical origin directly, efficiently separating signal from noise. To test this new complementary metric, we designed a reach-to-tap paradigm in VR to probe electroencephalography (EEG) and movement adaptation to visuo-haptic glitches. Our working hypothesis was that these glitches, or violations of the predicted action outcome, may indicate a disrupted user experience. Main results. Using prediction error negativity features, we classified VR glitches with 77% accuracy. We localized the EEG sources driving the classification and found midline cingulate EEG sources and a distributed network of parieto-occipital EEG sources to enable the classification success. Significance. Prediction error signatures from these sources reflect violations of users’ predictions during interaction with AR/VR, promising a robust and targeted marker for adaptive user interfaces.

-

Toward Human Augmentation Using Neural Fingerprints of Affordances. Lukas Gehrke, Pedro Lopes, and Klaus Gramann. In Affordances in Everyday Life: A Multidisciplinary Collection of Essays, Apr 2022

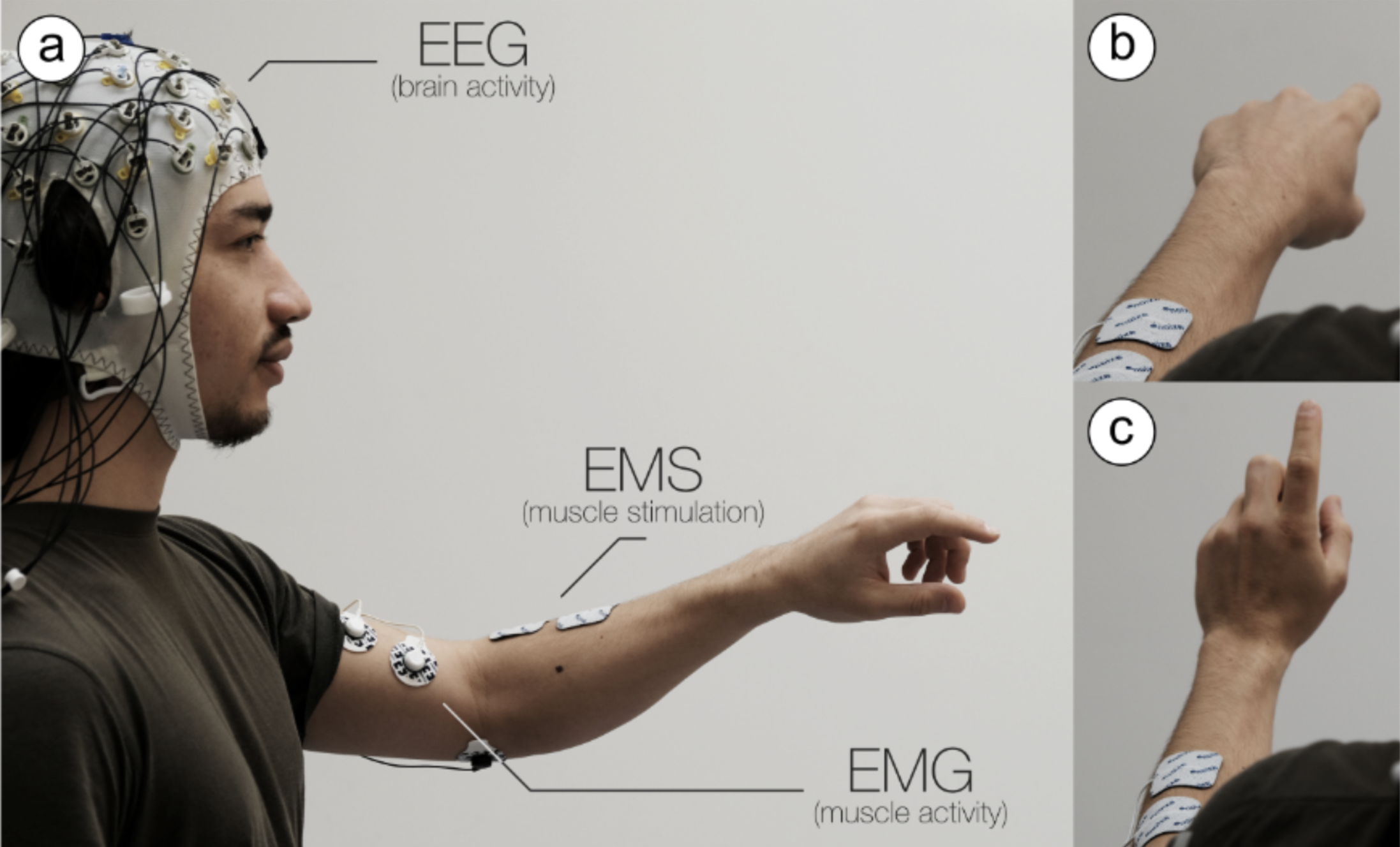

In our increasingly complex world, many objects in our environment do not readily afford their intended use case. From a designer’s viewpoint, the challenge then is to design for easily perceived utility. In this essay, we discuss a system to implement affordances on the user directly. We present the idea to leverage brain activity and actuation hardware to design the emergence of affordances. Our proposed system (1) tracks a user’s readiness for action in near real time through the electroencephalogram (EEG) and (2) implements affordances by cueing and physically moving the user’s body using haptic devices that can directly actuate the user’s muscles, such as motor-based exoskeletons or electrical muscle stimulation.

2021

-

Human cortical dynamics during full-body heading changes. Klaus Gramann, Friederike U Hohlefeld, Lukas Gehrke, and 1 more author. Scientific Reports, Apr 2021

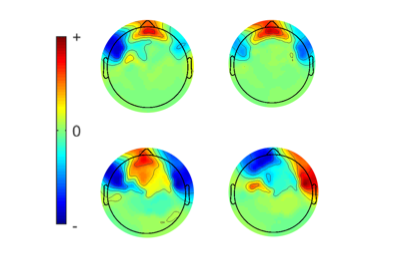

The retrosplenial complex (RSC) plays a crucial role in spatial orientation by computing heading direction and translating between distinct spatial reference frames based on multi-sensory information. While invasive studies allow investigating heading computation in moving animals, established non-invasive analyses of human brain dynamics are restricted to stationary setups. To investigate the role of the RSC in heading computation of actively moving humans, we used a Mobile Brain/Body Imaging approach synchronizing electroencephalography with motion capture and virtual reality. Data from physically rotating participants were contrasted with rotations based only on visual flow. During physical rotation, varying rotation velocities were accompanied by pronounced wide frequency band synchronization in RSC, the parietal and occipital cortices. In contrast, the visual flow rotation condition was associated with pronounced alpha band desynchronization, replicating previous findings in desktop navigation studies, and notably absent during physical rotation. These results suggest an involvement of the human RSC in heading computation based on visual, vestibular, and proprioceptive input and call for revisiting traditional findings of alpha desynchronization in areas of the navigation network during spatial orientation in movement-restricted participants.

-

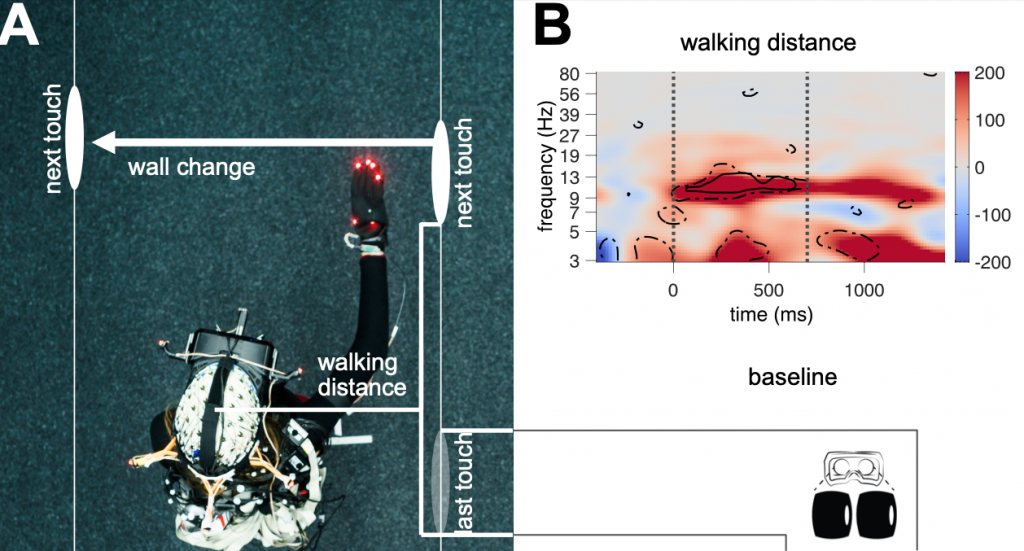

Single-trial regression of spatial exploration behavior indicates posterior EEG alpha modulation to reflect egocentric coding. Lukas Gehrke and Klaus Gramann. European Journal of Neuroscience, Apr 2021

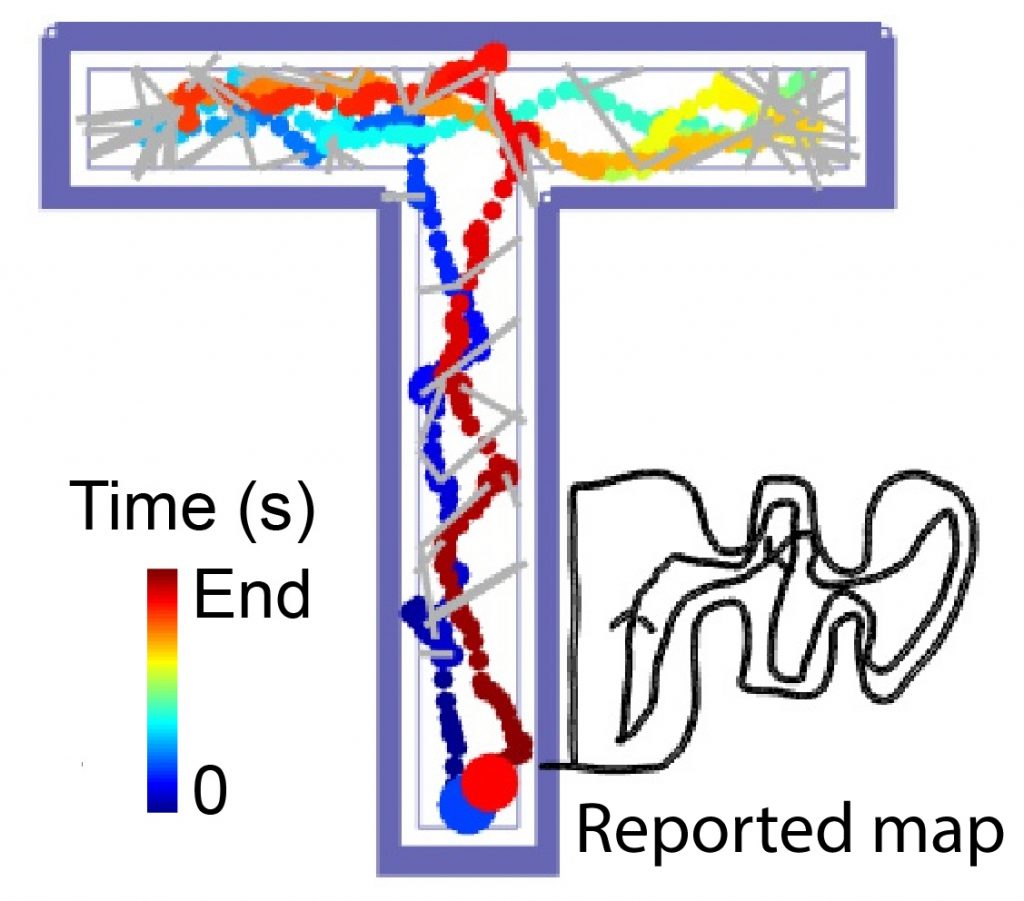

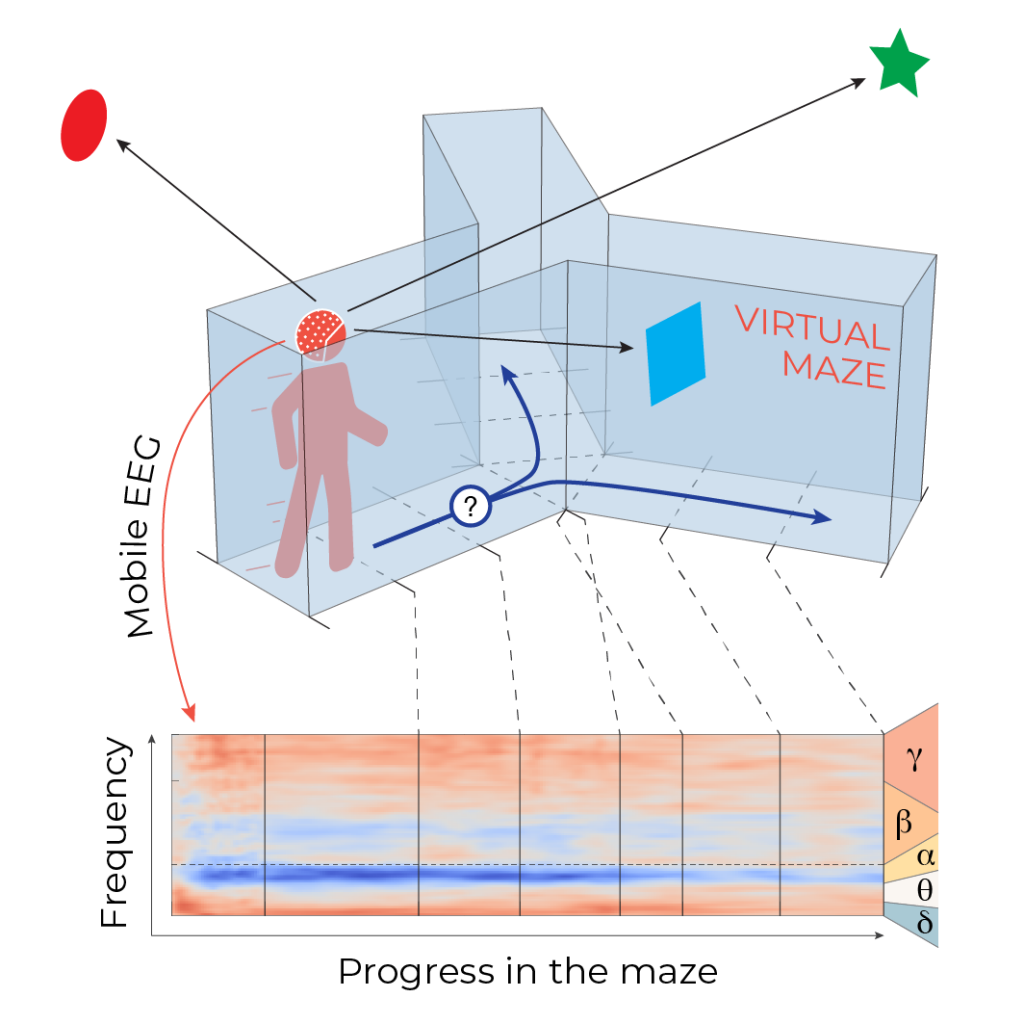

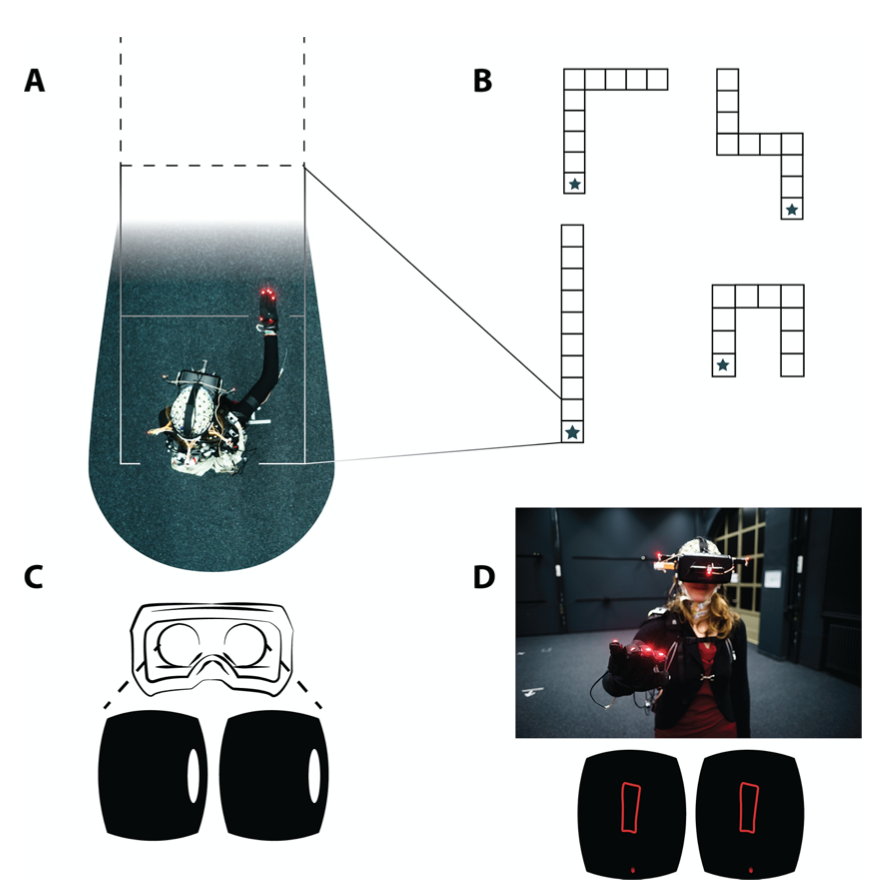

Learning to navigate uncharted terrain is a key cognitive ability that emerges as a deeply embodied process, with eye movements and locomotion proving most useful to sample the environment. We studied healthy human participants during active spatial learning of room-scale virtual reality (VR) mazes. In the invisible maze task, participants wearing a wireless electroencephalography (EEG) headset were free to explore their surroundings, only given the objective to build and foster a mental spatial representation of their environment. Spatial uncertainty was resolved by touching otherwise invisible walls that were briefly rendered visible inside VR, similar to finding your way in the dark. We showcase the capabilities of mobile brain/body imaging using VR, demonstrating several analysis approaches based on general linear models (GLMs) to reveal behavior-dependent brain dynamics. Confirming spatial learning via drawn sketch maps, we employed motion capture to image spatial exploration behavior describing a shift from initial exploration to subsequent exploitation of the mental representation. Using independent component analysis, the current work specifically targeted oscillations in response to wall touches reflecting isolated spatial learning events arising in deep posterior EEG sources located in the retrosplenial complex. Single-trial regression identified significant modulation of alpha oscillations by the immediate, egocentric, exploration behavior. Alpha power decreased when participants encountered novel walls and with increasing walking distance between subsequent touches of novel walls. We conclude that these oscillations play a prominent role during egocentric evidencing of allocentric spatial hypotheses.

-

The AudioMaze: An EEG and motion capture study of human spatial navigation in sparse augmented reality. Makoto Miyakoshi, Lukas Gehrke, Klaus Gramann, and 2 more authors. European Journal of Neuroscience, Apr 2021

Spatial navigation is one of the fundamental cognitive functions central to survival in most animals. Studies in humans investigating the neural foundations of spatial navigation traditionally use stationary, desktop protocols revealing the hippocampus, parahippocampal place area (PPA), and retrosplenial complex to be involved in navigation. However, brain dynamics during free navigation of the real world remain poorly understood. To address this issue, we developed a novel paradigm, the AudioMaze, in which participants freely explore a room-sized virtual maze while EEG is recorded synchronized to motion capture. Participants (n = 16) were blindfolded and explored different mazes, each in three successive trials, using their right hand as a probe to “feel” for virtual maze walls. When their hand “neared” a virtual wall, they received directional noise feedback. Evidence for spatial learning includes a shortening of time spent and an increase in movement velocity as the same maze was repeatedly explored. Theta-band EEG power in or near the right lingual gyrus, the posterior portion of the PPA, decreased across trials, potentially reflecting spatial learning. Effective connectivity analysis revealed directed information flow from the lingual gyrus to the midcingulate cortex, which may indicate an updating process that integrates spatial information with future action. To conclude, we found behavioral evidence of navigational learning in a sparse-AR environment, and a neural correlate of navigational learning was found near the lingual gyrus.

-

Mobile brain/body imaging of landmark-based navigation with high-density EEG. Alexandre Delaux, Jean-Baptiste Saint Aubert, Stephen Ramanoël, and 7 more authors. European Journal of Neuroscience, Apr 2021

Coupling behavioral measures and brain imaging in naturalistic, ecological conditions is key to comprehending the neural bases of spatial navigation. This highly integrative function encompasses sensorimotor, cognitive, and executive processes that jointly mediate active exploration and spatial learning. However, most neuroimaging approaches in humans are based on static, motion-constrained paradigms and they do not account for all these processes, in particular multisensory integration. Following the Mobile Brain/Body Imaging approach, we aimed to explore the cortical correlates of landmark-based navigation in actively behaving young adults, solving a Y-maze task in immersive virtual reality. EEG analysis identified a set of brain areas matching state-of-the-art brain imaging literature of landmark-based navigation. Spatial behavior in mobile conditions additionally involved sensorimotor areas related to motor execution and proprioception usually overlooked in static fMRI paradigms. As expected, we located a cortical source in or near the posterior cingulate, in line with the engagement of the retrosplenial complex in spatial reorientation. Consistent with its role in visuo-spatial processing and coding, we observed an alpha-power desynchronization while participants gathered visual information. We also hypothesized behavior-dependent modulations of the cortical signal during navigation. Despite finding few differences between the encoding and retrieval phases of the task, we identified transient time–frequency patterns attributed, for instance, to attentional demand, as reflected in the alpha/gamma range, or memory workload in the delta/theta range. We confirmed that combining mobile high-density EEG and biometric measures can help unravel the brain structures and the neural modulations subtending ecological landmark-based navigation.

2019

-

Neurofeedback during creative expression as a therapeutic tool. Stephanie M Scott and Lukas Gehrke. Mobile brain-body imaging and the neuroscience of art, innovation and creativity, Apr 2019

Engaging users in therapeutic and rehabilitative training is a challenge, both in sparking and in maintaining motivation. We explored how electroencephalographic (EEG) signals may be used to engage patients and promote creative rehabilitation and therapeutic interventions. We introduce a proof-of-concept measuring EEG during therapeutic drawing to adapt an interactive canvas online.

-

Detecting visuo-haptic mismatches in virtual reality using the prediction error negativity of event-related brain potentials. Lukas Gehrke, Sezen Akman, Pedro Lopes, and 5 more authors. In Proceedings of the 2019 CHI conference on human factors in computing systems, Apr 2019

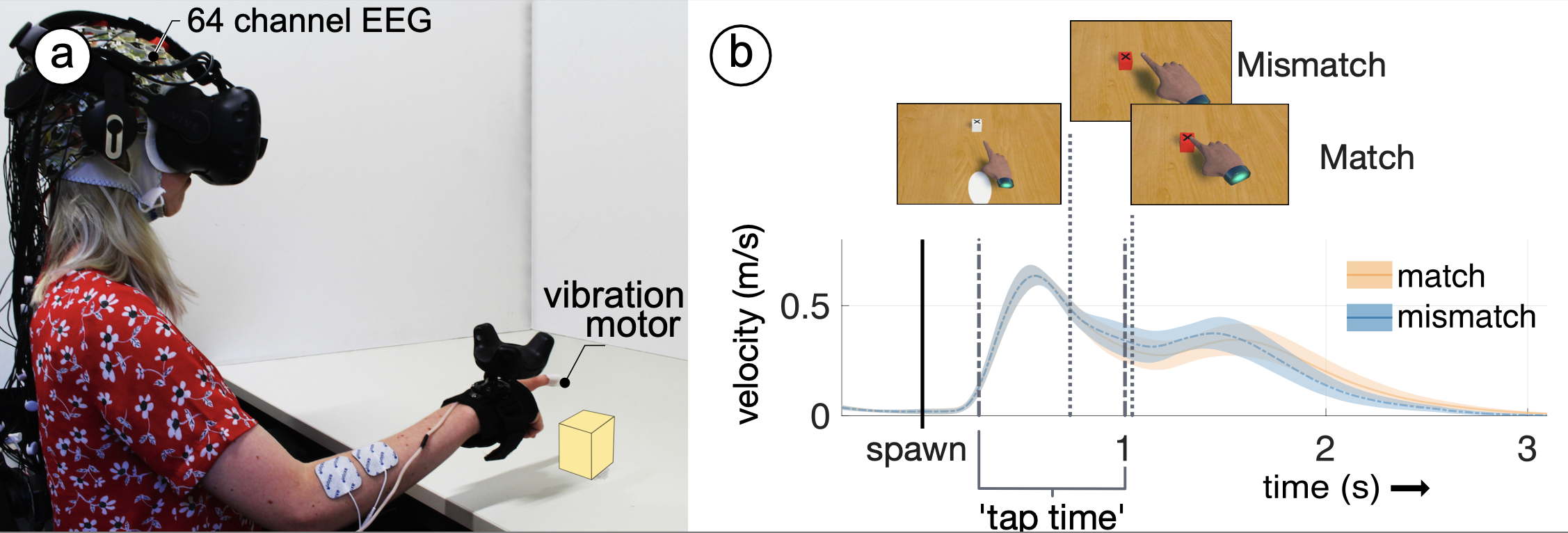

Designing immersion is the key challenge in virtual reality; this challenge has driven advancements in displays, rendering and, recently, haptics. To increase our sense of physical immersion, for instance, vibrotactile gloves render the sense of touching, while electrical muscle stimulation (EMS) renders forces. Unfortunately, the established metric to assess the effectiveness of haptic devices relies on the user’s subjective interpretation of unspecific, yet standardized, questions. Here, we explore a new approach to detect a conflict in visuo-haptic integration (e.g., inadequate haptic feedback based on poorly configured collision detection) using electroencephalography (EEG). We propose analyzing event-related potentials (ERPs) during interaction with virtual objects. In our study, participants touched virtual objects in three conditions and received either no haptic feedback, vibration, or vibration and EMS feedback. To provoke a brain response in unrealistic VR interaction, we also presented the feedback prematurely in 25% of the trials. We found that the early negativity component of the ERP (so-called prediction error) was more pronounced in the mismatch trials, indicating we successfully detected haptic conflicts using our technique. Our results are a first step towards using ERPs to automatically detect visuo-haptic mismatches in VR, such as those that can cause a loss of the user’s immersion.
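The design detail above, premature feedback in 25% of the trials, can be illustrated with a short randomization sketch. The abstract does not say how trials were ordered, so the shuffling approach, the function name, the condition labels, the trial count, and the seed are all assumptions made only for illustration.

```python
import random


def make_trial_sequence(n_trials, premature_ratio=0.25, seed=0):
    """Return a shuffled list of trial labels with a fixed share of premature trials.

    The labels and the shuffle-based ordering are hypothetical, not the study's procedure.
    """
    n_premature = round(n_trials * premature_ratio)
    labels = ["premature"] * n_premature + ["matched"] * (n_trials - n_premature)
    # Shuffle with a private, seeded generator so the order is reproducible.
    random.Random(seed).shuffle(labels)
    return labels


# Hypothetical block of 100 trials.
sequence = make_trial_sequence(n_trials=100)
print(sequence[:8], sequence.count("premature"))
```

Fixing the count before shuffling guarantees the exact ratio in every block, whereas drawing each trial independently would only match it on average.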

-

Extracting Motion-Related Subspaces from EEG in Mobile Brain/Body Imaging Studies using Source Power Comodulation. Lukas Gehrke, Luke Guerdan, and Klaus Gramann. In 2019 9th International IEEE/EMBS Conference on Neural Engineering (NER), Apr 2019

Mobile Brain/Body Imaging (MoBI) is an emerging non-invasive approach to investigate human brain activity and motor behavior associated with cognitive processes in natural conditions. MoBI studies and analysis pipelines combine brain measurements, e.g. Electroencephalography (EEG), with motion data as participants conduct tasks with near-natural behavior. Within the field, however, standard source decomposition and reconstruction pipelines largely rely on unsupervised blind source separation (BSS) approaches and do not consider movement information to guide the decomposition of oscillatory brain sources. We propose the use of a supervised spatial filtering method, Source Power Co-modulation (SPoC), for extracting source components that co-modulate with body motion. Further, we introduce a method to validate the quality of oscillatory sources in MoBI studies. We illustrate the approach to investigate the link between hand and head movement kinematics and power dynamics of EEG sources while participants explore an invisible maze in virtual reality. Stable oscillatory source envelopes correlating with hand and head motion were isolated in all subjects, with median ρ = .13 for all sources and median ρ = .16 for sources passing the selection criteria. The results indicate that it is possible to improve movement-related source separation to further guide our understanding of how movement and brain dynamics interact.

-

MoBI—Mobile brain/body imaging. Evelyn Jungnickel, Lukas Gehrke, Marius Klug, and 1 more author. Neuroergonomics, Apr 2019

Mobile brain/body imaging (MoBI) is an integrative multimethod approach used to investigate human brain activity, motor behavior, and other physiological data associated with cognitive processes that involve active behavior. This chapter reviews the basic principles behind MoBI, recording instrumentation, and best practices for different processing and analysis approaches. The focus is on electroencephalography as the only portable method to image the human brain with sufficient temporal resolution to investigate fine-grained subsecond-scale cognitive processes. The chapter shows how controlled and modifiable experimental environments can be used to investigate natural cognition and active behavior in a wide range of applications in neuroergonomics and beyond.

2018

-

The invisible maze task (IMT): interactive exploration of sparse virtual environments to investigate action-driven formation of spatial representations. Lukas Gehrke, John R Iversen, Scott Makeig, and 1 more author. In Spatial Cognition XI: 11th International Conference, Spatial Cognition 2018, Tübingen, Germany, September 5-8, 2018, Proceedings 11, Apr 2018

The neuroscientific study of human navigation has been constrained by the prerequisite of traditional brain imaging studies that require participants to remain stationary. Such imaging approaches neglect a central component that characterizes navigation: the multisensory experience of self-movement. Navigation by active movement through space combines multisensory perception with internally generated self-motion cues. We investigated the spatial microgenesis during free ambulatory exploration of interactive sparse virtual environments using motion capture synchronized to high-resolution electroencephalographic (EEG) data as well as psychometric and self-report measures. In such environments, map-like allocentric representations must be constructed out of transient, egocentric first-person perspective 3-D spatial information. Considering individual differences in spatial learning ability, we studied whether changes in exploration behavior coincide with spatial learning of an environment. To this end, we analyzed the quality of sketch maps (a description of spatial learning) that were produced after repeated learning trials for differently complex maze environments. We observed significant changes in active exploration behavior from the first to the last exploration of a maze: a decrease in time spent in the maze predicted an increase in subsequent sketch map quality. Furthermore, individual differences in spatial abilities as well as differences in the level of experienced immersion had an impact on the quality of spatial learning. Our results demonstrate converging evidence of observable behavioral changes associated with spatial learning in a framework that allows the study of cortical dynamics of navigation.